
Machine Learning with scikit-learn (sklearn) - 2 (XGBoost)

Category: Machine Learning, posted 2021. 3. 11. 13:23

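Install the following packages before running the code.

Run these in the Anaconda Prompt:

    conda install -c conda-forge graphviz
    conda install -c conda-forge python-graphviz
    pip install pydot
    pip install pydotplus

The XGBoost section at the end also needs the `xgboost` package (for example, `pip install xgboost`).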

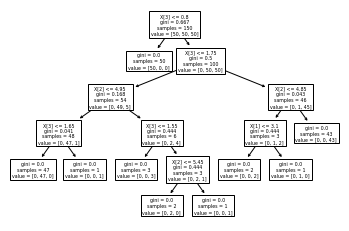

    %matplotlib inline
    from sklearn.datasets import load_iris
    from sklearn.model_selection import cross_val_score
    from sklearn import tree

    clf = tree.DecisionTreeClassifier(random_state=0)
    iris = load_iris()
    clf = clf.fit(iris.data, iris.target)
    tree.plot_tree(clf)

Output: the fitted tree is drawn. `plot_tree` also returns the labels of its 17 nodes as `Text` objects. The root node is `X[3] <= 0.8, gini = 0.667, samples = 150, value = [50, 50, 50]`. Its left child is a pure leaf (`gini = 0.0, samples = 50, value = [50, 0, 0]`), and its right child splits again on `X[3] <= 1.75` (`gini = 0.5, samples = 100, value = [0, 50, 50]`). Every one of the 9 leaves ends with `gini = 0.0`.

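Trees choose each split by information gain, which is the reduction in impurity. Impurity is measured either by Gini impurity (`criterion="gini"`, the default) or by entropy (`criterion="entropy"`).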
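The sketch below makes the impurity measures concrete by recomputing the root of the iris tree above. For class counts [50, 50, 50] the Gini impurity is 0.667, and the code also computes the information gain of the first split into [50, 0, 0] and [0, 50, 50]. The helper names `gini`, `entropy` and `information_gain` are our own, not scikit-learn API.

```python
import numpy as np

def gini(counts):
    # Gini impurity: 1 - sum(p_k^2) over the classes in a node
    p = np.asarray(counts, dtype=float) / np.sum(counts)
    return 1.0 - np.sum(p ** 2)

def entropy(counts):
    # Entropy in bits: -sum(p_k * log2(p_k)), skipping empty classes
    p = np.asarray(counts, dtype=float) / np.sum(counts)
    p = p[p > 0]
    return -np.sum(p * np.log2(p))

def information_gain(parent, children, impurity=gini):
    # Parent impurity minus the sample-weighted impurity of the child nodes
    n = np.sum(parent)
    weighted = sum(np.sum(c) / n * impurity(c) for c in children)
    return impurity(parent) - weighted

root = [50, 50, 50]                    # class counts at the root of the iris tree
left, right = [50, 0, 0], [0, 50, 50]  # children after the split X[3] <= 0.8
print(round(gini(root), 3))                             # 0.667
print(round(gini(right), 3))                            # 0.5
print(round(information_gain(root, [left, right]), 3))  # 0.333
print(round(entropy(root), 3))                          # 1.585
```

scikit-learn picks, at each node, the split with the largest such gain.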

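# Hyperparameters -> GridSearchCV

Why build a decision tree? Each split is chosen to give the largest reduction in impurity; for regression trees, that means the largest reduction in variance.

- To reach a decision with as few comparisons as possible

The drawbacks: trees overfit easily, and changing the order of the variables can change the result.

- `max_depth`: the maximum depth of the tree, i.e. how many levels of splits are allowed

- `min_samples_split`: the minimum number of samples a node needs before it can be split (default 2); nodes that are not split are leaves

- `min_samples_leaf`: the minimum number of samples required in a leaf node

- To test combinations of these hyperparameters: `GridSearchCV`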
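Here is a minimal sketch of tuning the three hyperparameters listed above with `GridSearchCV` on the iris data. The candidate values in `param_grid` are arbitrary illustrative choices, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()

# Illustrative candidate values: 4 * 3 * 3 = 36 combinations
param_grid = {
    "max_depth": [2, 3, 4, None],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 5],
}

grid = GridSearchCV(DecisionTreeClassifier(random_state=0), param_grid, cv=5)
grid.fit(iris.data, iris.target)  # 36 combinations x 5 folds = 180 fits

print(grid.best_params_)           # the best combination found
print(round(grid.best_score_, 3))  # its mean cross-validated accuracy
```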

Cross-validation and inspecting the fitted tree:

    cross_val_score(clf, iris.data, iris.target, cv=10)  # accuracy on each of the 10 folds
    # array([1.        , 0.93333333, 1.        , 0.93333333, 0.93333333,
    #        0.86666667, 0.93333333, 1.        , 1.        , 1.        ])

    print(clf.get_n_leaves())  # number of leaves
    # 9

    clf.get_depth()  # maximum depth (longest path from the root to a leaf)
    # 5

    clf.get_params()  # the model's parameters (all defaults except random_state)
    # {'ccp_alpha': 0.0, 'class_weight': None, 'criterion': 'gini', 'max_depth': None,
    #  'max_features': None, 'max_leaf_nodes': None, 'min_impurity_decrease': 0.0,
    #  'min_impurity_split': None, 'min_samples_leaf': 1, 'min_samples_split': 2,
    #  'min_weight_fraction_leaf': 0.0, 'presort': 'deprecated', 'random_state': 0,
    #  'splitter': 'best'}
    # (output from an older scikit-learn; newer versions no longer have 'min_impurity_split' or 'presort')

    print(iris.data.shape)     # (rows, columns)
    print(iris.feature_names)  # column names
    # (150, 4)
    # ['sepal length (cm)', 'sepal width (cm)', 'petal length (cm)', 'petal width (cm)']

    import pandas as pd
    data = pd.DataFrame(iris.data)
    print(data.head())
    #      0    1    2    3
    # 0  5.1  3.5  1.4  0.2
    # 1  4.9  3.0  1.4  0.2
    # 2  4.7  3.2  1.3  0.2
    # 3  4.6  3.1  1.5  0.2
    # 4  5.0  3.6  1.4  0.2

    clf.predict(data.iloc[1:150, :])  # rows 1 to 149 (row 0 is skipped)
    # 49 zeros, then 50 ones, then 50 twos: every one of these training samples is predicted as its true class

Designing a Pipeline

    from sklearn.pipeline import make_pipeline
    from sklearn.naive_bayes import MultinomialNB  # naive Bayes
    from sklearn.preprocessing import Binarizer    # maps values to 0 or 1 around a threshold

    pipe = make_pipeline(Binarizer(), MultinomialNB())  # step names are generated automatically
    print(pipe.steps[0])
    print(pipe[0])  # pipe['binarizer'] also works
    # ('binarizer', Binarizer())
    # Binarizer()

    from sklearn.pipeline import Pipeline
    from sklearn.svm import SVC            # support vector classifier
    from sklearn.decomposition import PCA  # principal component analysis

    estimators = [('reduce_dim', PCA()), ('clf', SVC())]  # (name, estimator) pairs
    pipe = Pipeline(estimators)
    pipe
    # Pipeline(steps=[('reduce_dim', PCA()), ('clf', SVC())])

    print(pipe.steps[0])  # step 0 is the PCA
    print(pipe[0])        # pipe['reduce_dim'] also works
    # ('reduce_dim', PCA())
    # PCA()

    pipe.set_params(clf__C=10)  # pass a parameter to a step as <step name>__<parameter>
    # Pipeline(steps=[('reduce_dim', PCA()), ('clf', SVC(C=10))])
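The sketch below shows why a pipeline is useful in practice. It assumes the iris data and a `StandardScaler` + `SVC` pipeline, neither of which appears in the pipelines above. After the train/test split, `fit` learns the scaling from the training data only, and `score` sends the test data through that same fitted scaler. The example also shows that `make_pipeline` names each step after its lowercased class name, which is the prefix that `set_params` uses.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
# Split first, so the scaler never sees the test data
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

pipe = make_pipeline(StandardScaler(), SVC())
print(list(pipe.named_steps))  # ['standardscaler', 'svc']: lowercased class names
pipe.set_params(svc__C=10)     # <step name>__<parameter>
pipe.fit(X_train, y_train)     # fits the scaler on X_train, transforms it, then fits the SVC
print(pipe.score(X_test, y_test))  # X_test is transformed by the scaler fitted on X_train
```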

GridSearchCV

    import numpy as np

    def make_data(N, err=1.0, rseed=1):
        # X: N random values in [0, 1), squared; y: a smooth curve of X plus Gaussian noise
        rng = np.random.RandomState(rseed)
        X = rng.rand(N, 1) ** 2
        y = 10 - 1. / (X.ravel() + 0.1)
        if err > 0:
            y += err * rng.randn(N)
        return X, y

    X, y = make_data(40)
    print(type(X))
    # <class 'numpy.ndarray'>

    from sklearn.model_selection import GridSearchCV
    from sklearn.preprocessing import PolynomialFeatures  # generates polynomial features (x, x^2, ...)
    from sklearn.pipeline import make_pipeline
    from sklearn.linear_model import LinearRegression
    import numpy as np

    # Returns a pipeline: polynomial features followed by linear regression
    def PolynomialRegression(degree=2, **kwargs):  # **kwargs: extra keyword arguments for LinearRegression
        return make_pipeline(PolynomialFeatures(degree), LinearRegression(**kwargs))

    param_grid = {'polynomialfeatures__degree': np.arange(21),       # 21 values
                  'linearregression__fit_intercept': [True, False],  # 2 values
                  'linearregression__normalize': [True, False]}      # 2 values -> 21 * 2 * 2 = 84 combinations
    param_grid
    # {'polynomialfeatures__degree': array([ 0,  1,  2,  3,  4,  5,  6,  7,  8,  9, 10, 11, 12, 13, 14, 15, 16,
    #         17, 18, 19, 20]),
    #  'linearregression__fit_intercept': [True, False],
    #  'linearregression__normalize': [True, False]}

    grid = GridSearchCV(PolynomialRegression(), param_grid, cv=7)  # cv: number of cross-validation folds
    grid.fit(X, y)
    # GridSearchCV(cv=7,
    #              estimator=Pipeline(steps=[('polynomialfeatures',
    #                                         PolynomialFeatures()),
    #                                        ('linearregression',
    #                                         LinearRegression())]),
    #              param_grid={'linearregression__fit_intercept': [True, False],
    #                          'linearregression__normalize': [True, False],
    #                          'polynomialfeatures__degree': array([ 0,  1,  2,  3,  4,  5,  6,  7,  8,  9, 10, 11, 12, 13, 14, 15, 16,
    #        17, 18, 19, 20])})

Note: the `normalize` parameter of `LinearRegression` was deprecated in scikit-learn 1.0 and removed in 1.2. On newer versions, remove that line from `param_grid`, which leaves 21 × 2 = 42 combinations. If you want scaling, add a `StandardScaler` step to the pipeline instead.

Best combination

    grid.best_params_  # the best of the 84 combinations tested
    # {'linearregression__fit_intercept': False,
    #  'linearregression__normalize': True,
    #  'polynomialfeatures__degree': 4}

    grid.best_estimator_  # the pipeline refit with the best combination
    # Pipeline(steps=[('polynomialfeatures', PolynomialFeatures(degree=4)),
    #                 ('linearregression',
    #                  LinearRegression(fit_intercept=False, normalize=True))])

    grid.best_score_  # mean cross-validated score of the best combination (R² for a regressor)
    # 0.8972710305736544

    import matplotlib.pyplot as plt

    model = grid.best_estimator_
    X_test = np.linspace(-0.1, 1.1, 500)[:, None]  # 500 points as a column vector
    plt.scatter(X.ravel(), y)  # ravel() flattens to 1-D
    lim = plt.axis()
    y_test = model.fit(X, y).predict(X_test)
    plt.plot(X_test.ravel(), y_test)
    plt.axis(lim);

    import numpy as np
    import pandas as pd

    dataset = [10, 12, 12, 13, 12, 11, 14, 13, 15, 10, 10, 10, 100, 12, 14, 13, 102, 105, 123, 125,
               12, 10, 10, 11, 12, 15, 12, 13, 12, 11, 14, 13, 15, 10, 15, 12, 10, 14, 13, 15, 10]

    def detect_outlier(data_1):
        outliers = []  # created inside the function so repeated calls do not accumulate results
        threshold = 3  # cutoff: |z| > 3 counts as an outlier
        mean_1 = np.mean(data_1)
        std_1 = np.std(data_1)
        for y in data_1:
            z_score = (y - mean_1) / std_1  # z-score
            if np.abs(z_score) > threshold:
                outliers.append(y)
        return outliers

    outlier_datapoints = detect_outlier(dataset)
    print(outlier_datapoints)
    # [123, 125]
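The z-score rule above misses 100, 102 and 105. The extreme values themselves inflate the mean and standard deviation, so their z-scores stay below 3. For comparison, here is a more robust alternative that is swapped in for illustration and is not the author's method: Tukey's fences, which are based on the quartiles (the same IQR that `robust_scale` uses). For this dataset Q1 = 11 and Q3 = 14. With the usual `k=1.5`, anything outside 6.5 to 18.5 is flagged.

```python
import numpy as np

def detect_outlier_iqr(data, k=1.5):
    # Tukey's fences: flag values below Q1 - k*IQR or above Q3 + k*IQR
    q1, q3 = np.percentile(data, [25, 75])
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in data if v < low or v > high]

dataset = [10, 12, 12, 13, 12, 11, 14, 13, 15, 10, 10, 10, 100, 12, 14, 13, 102, 105, 123, 125,
           12, 10, 10, 11, 12, 15, 12, 13, 12, 11, 14, 13, 15, 10, 15, 12, 10, 14, 13, 15, 10]
print(detect_outlier_iqr(dataset))  # [100, 102, 105, 123, 125]
```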

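    # scale: z-score, (x - mean) / std
    # robust_scale: (x - median) / IQR, using the median (the positional middle value) instead of the mean
    # minmax_scale: maps to [0, 1]
    # maxabs_scale: divides by the largest absolute value, so the result lies in [-1, 1]
    from sklearn.preprocessing import scale, robust_scale, minmax_scale, maxabs_scale

    print(np.arange(10, dtype=float) - 3)  # np.float was removed in NumPy 1.24; use the built-in float
    x = (np.arange(10, dtype=float) - 3).reshape(-1, 1)
    df = pd.DataFrame(np.hstack([x, scale(x), robust_scale(x), minmax_scale(x), maxabs_scale(x)]),
                      columns=["x", "scale(x)", "robust_scale(x)", "minmax_scale(x)", "maxabs_scale(x)"])
    df.plot()
    # [-3. -2. -1.  0.  1.  2.  3.  4.  5.  6.]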
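To check the comment lines above, this sketch writes out each scaler's formula with NumPy and compares the result with the scikit-learn function on the same `x`. Two details matter for the match. `scale` uses the population standard deviation (`ddof=0`), which is also `np.std`'s default. `robust_scale` uses the 25th and 75th percentiles by default.

```python
import numpy as np
from sklearn.preprocessing import maxabs_scale, minmax_scale, robust_scale, scale

x = (np.arange(10, dtype=float) - 3).reshape(-1, 1)  # the same x as above: -3 .. 6

# Each formula written out by hand
z = (x - x.mean()) / x.std()                  # population std (ddof=0), as scale() uses
q1, median, q3 = np.percentile(x, [25, 50, 75])
robust = (x - median) / (q3 - q1)             # centre on the median, divide by the IQR
minmax = (x - x.min()) / (x.max() - x.min())  # 0 .. 1
maxabs = x / np.abs(x).max()                  # -0.5 .. 1 here, since max |x| = 6

print(np.allclose(z, scale(x)), np.allclose(robust, robust_scale(x)),
      np.allclose(minmax, minmax_scale(x)), np.allclose(maxabs, maxabs_scale(x)))  # True True True True
```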

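Why normalize

Why normalize at all? To give every variable an equal contribution. Otherwise a variable measured on a larger scale dominates distance-based calculations simply because of its units.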

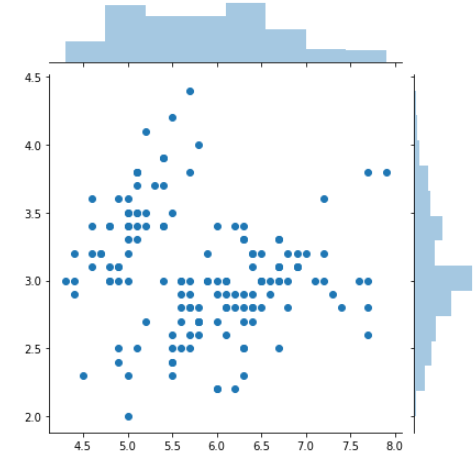

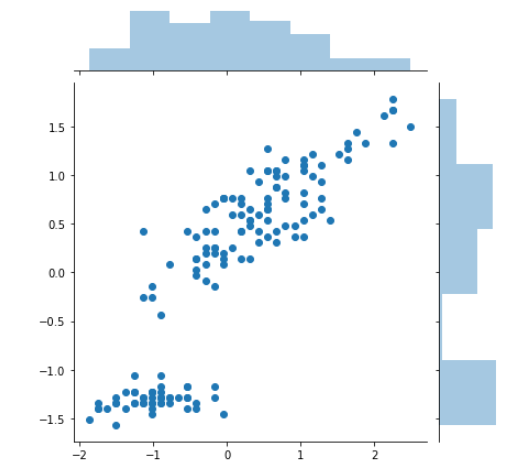
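Here is a small sketch of that contribution problem, using made-up numbers (heights in metres and monthly incomes in won are assumptions for illustration). Before scaling, the income column accounts for virtually all of the squared Euclidean distance between two rows. After `StandardScaler`, both columns have standard deviation 1, and the 0.3 m height difference becomes what separates the two rows.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Made-up data: column 0 = height in metres, column 1 = monthly income in won
X = np.array([[1.60, 3_000_000],
              [1.90, 3_100_000],
              [1.61, 5_000_000],
              [1.75, 4_200_000]])

def feature_share(a, b):
    # Fraction of the squared Euclidean distance between rows a and b contributed by each column
    sq = (a - b) ** 2
    return sq / sq.sum()

print(feature_share(X[0], X[1]))    # income contributes almost all of the distance
Xs = StandardScaler().fit_transform(X)
print(Xs.std(axis=0))               # [1. 1.]
print(feature_share(Xs[0], Xs[1]))  # now the height difference dominates
```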

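    # Scaling changes the location and spread, but the shape of the distribution stays the same
    import numpy as np
    import matplotlib.pyplot as plt
    import seaborn as sns
    from sklearn.datasets import load_iris
    from sklearn.preprocessing import scale

    iris = load_iris()
    print(type(iris))
    data1 = iris.data
    data2 = scale(iris.data)
    print("mean before preprocessing:", np.mean(data1, axis=0))
    print("std before preprocessing:", np.std(data1, axis=0))
    print("mean after preprocessing:", np.mean(data2, axis=0))
    print("std after preprocessing:", np.std(data2, axis=0))
    sns.jointplot(x=data1[:, 0], y=data1[:, 1])  # recent seaborn versions require the x= and y= keywords
    plt.show()
    sns.jointplot(x=data2[:, 0], y=data2[:, 1])  # the same two columns after scaling, for comparison
    plt.show()
    # mean before preprocessing: [5.84333333 3.05733333 3.758      1.19933333]
    # std before preprocessing: [0.82530129 0.43441097 1.75940407 0.75969263]
    # mean after preprocessing: [-1.69031455e-15 -1.84297022e-15 -1.69864123e-15 -1.40924309e-15]  (0 up to floating-point error)
    # std after preprocessing: [1. 1. 1. 1.]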

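Standardization with StandardScaler (mean 0, standard deviation 1; the shape of the distribution is unchanged, so this does not turn the data into a normal distribution)

    from sklearn.preprocessing import StandardScaler

    scaler = StandardScaler()        # create an instance first
    scaler.fit(data1)                # learn each column's mean and standard deviation
    data2 = scaler.transform(data1)  # a transformer uses fit/transform; a predictor such as a classifier uses fit/predict
    data1.std(), data2.std()
    # (1.9738430577598278, 1.0)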

One-hot Encoder

    from sklearn.preprocessing import OneHotEncoder
    import numpy as np

    ohe = OneHotEncoder()
    X = np.array([[0], [1], [2]])
    ohe.fit(X)
    # print(ohe.n_values_, ohe.feature_indices_, ohe.active_features_)  # these attributes no longer exist in newer versions
    ohe.categories_  # the categories found in each column
    # [array([0, 1, 2])]

    print(ohe.transform(X).toarray())  # transform() returns a sparse matrix; toarray() makes it dense
    # [[1. 0. 0.]
    #  [0. 1. 0.]
    #  [0. 0. 1.]]

    # 3 input columns -> 10 output columns (2 + 3 + 5 categories)
    X = np.array([[0, 0, 4], [1, 1, 0], [0, 2, 1], [1, 0, 2], [1, 1, 3]])
    ohe = OneHotEncoder()
    ohe.fit(X)
    print(ohe.transform(X).toarray())  # show as a dense array
    # [[1. 0. 1. 0. 0. 0. 0. 0. 0. 1.]
    #  [0. 1. 0. 1. 0. 1. 0. 0. 0. 0.]
    #  [1. 0. 0. 0. 1. 0. 1. 0. 0. 0.]
    #  [0. 1. 1. 0. 0. 0. 0. 1. 0. 0.]
    #  [0. 1. 0. 1. 0. 0. 0. 0. 1. 0.]]

Label Encoder

    # LabelEncoder: maps each class label to an integer 0 .. n_classes-1
    from sklearn.preprocessing import LabelEncoder

    le = LabelEncoder()
    le.fit([1, 2, 2, 6])
    le.classes_
    # array([1, 2, 6])

    le.transform([1, 1, 2, 6])
    # array([0, 0, 1, 2], dtype=int64)

    le.inverse_transform([0, 0, 1, 2])
    # array([1, 1, 2, 6])

    # Exercise: encode the following data (Seoul, Seoul, Daejeon, Busan).
    a = ["서울", "서울", "대전", "부산"]
    le.fit(a)
    le.classes_  # sorted, so 대전 = 0, 부산 = 1, 서울 = 2
    a_a = le.transform(a)
    a_a
    # array([2, 2, 0, 1], dtype=int64)

    le.inverse_transform(a_a)
    # array(['서울', '서울', '대전', '부산'], dtype='<U2')

Dictionary vectorizer

    # DictVectorizer: turns a list of dicts into a feature matrix, one column per key
    from sklearn.feature_extraction import DictVectorizer

    v = DictVectorizer(sparse=False)
    D = [{'foo': 1, 'bar': 2}, {'foo': 3, 'baz': 1}]
    X = v.fit_transform(D)
    X
    # columns (sorted): 'bar' 'baz' 'foo'
    # row 0:              2     0     1
    # row 1:              0     1     3
    # array([[2., 0., 1.],
    #        [0., 1., 3.]])

    v.feature_names_
    # ['bar', 'baz', 'foo']

    v.inverse_transform(X)
    # [{'bar': 2.0, 'foo': 1.0}, {'baz': 1.0, 'foo': 3.0}]

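Handling missing values

    from sklearn.impute import SimpleImputer
    import numpy as np

    # Replace NaN with the column mean learned in fit(); other strategies: 'median', 'most_frequent' (mode), 'constant'
    imp_mean = SimpleImputer(missing_values=np.nan, strategy='mean')
    imp_mean.fit([[7, 2, 3], [4, np.nan, 6], [10, 5, 9]])  # column means: 7, 3.5, 6
    X = [[np.nan, 2, 3], [4, np.nan, 6], [10, np.nan, 9]]
    print(imp_mean.transform(X))
    # [[ 7.   2.   3. ]
    #  [ 4.   3.5  6. ]
    #  [10.   3.5  9. ]]

Tip: you can fill missing values with the mean as above. Alternatively, you can train a machine learning model to predict the missing values and use those predictions as values of the independent variable.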
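Here is a minimal sketch of the model-based approach from the tip. It uses made-up data and `LinearRegression` as the predictor, though any regressor would do. The model is fit on the rows where the column is present, and then it predicts the missing entries from the other columns. scikit-learn also ships ready-made model-based imputers such as `KNNImputer` and the experimental `IterativeImputer`.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Made-up data: column 1 is missing in row 3 (and equals 2 * column 0 in the other rows)
X = np.array([[1.0, 2.0, 5.0],
              [2.0, 4.0, 1.0],
              [3.0, 6.0, 7.0],
              [4.0, np.nan, 2.0],
              [5.0, 10.0, 3.0]])

target_col = 1
missing = np.isnan(X[:, target_col])
other_cols = [c for c in range(X.shape[1]) if c != target_col]

# Train on the complete rows: predict column 1 from the other columns
reg = LinearRegression().fit(X[~missing][:, other_cols], X[~missing, target_col])

X_filled = X.copy()
X_filled[missing, target_col] = reg.predict(X[missing][:, other_cols])
print(X_filled[3])  # the gap is filled with (approximately) 8
```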

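One-hot encoding works the same way for string categories:

    ohe = OneHotEncoder()
    ohe.fit([["서울"], ["서울"], ["대전"], ["부산"]])  # categories sorted: 대전, 부산, 서울
    ohe.transform([["서울"], ["서울"]]).toarray()
    # array([[0., 0., 1.],
    #        [0., 0., 1.]])

Polynomial features:

    from sklearn.preprocessing import PolynomialFeatures

    X = np.arange(6).reshape(3, 2)
    X
    # array([[0, 1],
    #        [2, 3],
    #        [4, 5]])

    # Degree 2 turns [a, b] into [1, a, b, a^2, ab, b^2], used for nonlinear (polynomial) regression
    poly = PolynomialFeatures(2)
    poly.fit_transform(X)
    # array([[ 1.,  0.,  1.,  0.,  0.,  1.],
    #        [ 1.,  2.,  3.,  4.,  6.,  9.],
    #        [ 1.,  4.,  5., 16., 20., 25.]])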

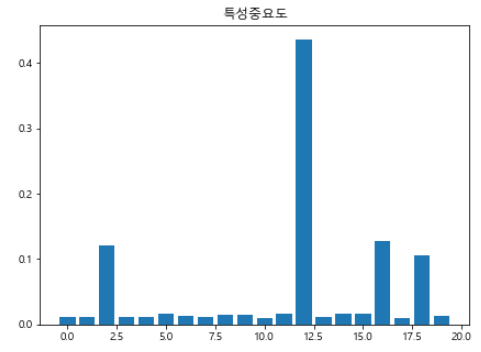

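Ensemble

RandomForest: builds many decision tree (DT) models and combines their results.

Continuous target (regression): predicts the average of the trees' predictions.

Discrete target (classification): decides by a vote over the trees. scikit-learn's implementation averages the trees' predicted class probabilities rather than counting hard votes.

For example, on synthetic data:

    from sklearn.datasets import make_classification
    from sklearn.ensemble import RandomForestClassifier

    X, y = make_classification(1000)  # 1000 samples, 20 features by default
    rf = RandomForestClassifier(n_estimators=30)  # a forest of 30 trees
    rf.fit(X, y)
    # RandomForestClassifier(n_estimators=30)

    print("Accuracy:\t", (y == rf.predict(X)).mean())  # accuracy on the training data itself
    # Accuracy:  1.0

This accuracy is measured on the same data the forest was trained on, so 1.0 overstates how well it generalizes. Use a held-out test set or cross-validation for a fair estimate.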
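You can check the averaging that scikit-learn's forests use directly against the fitted `estimators_`. The classifier's `predict_proba` is the mean of its trees' probabilities, and the regressor's prediction is the mean of its trees' predictions. The synthetic data sizes and `random_state` values below are arbitrary. The variable names (`rfc`, `rfr`, ...) are chosen so the `rf` used in the next cells is not overwritten.

```python
import numpy as np
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import RandomForestClassifier, RandomForestRegressor

# Classification: forest probabilities = mean of the trees' probabilities
Xc, yc = make_classification(n_samples=300, random_state=0)
rfc = RandomForestClassifier(n_estimators=30, random_state=0).fit(Xc, yc)
tree_proba = np.mean([t.predict_proba(Xc) for t in rfc.estimators_], axis=0)
print(np.allclose(tree_proba, rfc.predict_proba(Xc)))  # True

# Regression: forest prediction = mean of the trees' predictions
Xr, yr = make_regression(n_samples=300, n_features=5, random_state=0)
rfr = RandomForestRegressor(n_estimators=30, random_state=0).fit(Xr, yr)
tree_mean = np.mean([t.predict(Xr) for t in rfr.estimators_], axis=0)
print(np.allclose(tree_mean, rfr.predict(Xr)))  # True
```

`predict` for the classifier then returns the class with the highest averaged probability.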

Using a Korean font

    import matplotlib.pyplot as plt

    # Font setting so that Korean text such as the title below renders (Malgun Gothic is a Windows font)
    plt.rcParams['font.family'] = 'Malgun Gothic'
    f, ax = plt.subplots(figsize=(7, 5))
    ax.bar(range(0, len(rf.feature_importances_)), rf.feature_importances_)
    ax.set_title("특성중요도")  # "feature importance"

    print("Number of features", rf.n_features_in_)  # n_features_ was removed in scikit-learn 1.2
    print("Models", rf.estimators_)                 # the individual fitted trees
    # Number of features 20
    # Models [DecisionTreeClassifier(max_features='auto', random_state=1395913497),
    #         DecisionTreeClassifier(max_features='auto', random_state=119341817),
    #         ...
    #         DecisionTreeClassifier(max_features='auto', random_state=751674714)]  (30 trees in total)

    # Exercise: load the data with load_boston() and print the variable importances from a random forest.
    from sklearn.datasets import load_boston  # regression or classification?
    from sklearn.ensemble import RandomForestRegressor  # a regressor, because the target (house price) is continuous

    a = load_boston()
    X = a.data
    y = a.target
    names = a["feature_names"]
    rf = RandomForestRegressor()
    rf.fit(X, y)
    # RandomForestRegressor()

    # (importance rounded to 2 decimals, feature name), most important first
    print(sorted(zip(map(lambda x: round(x, 2), rf.feature_importances_), names), reverse=True))
    # [(0.49, 'RM'), (0.32, 'LSTAT'), (0.07, 'DIS'), (0.04, 'CRIM'), (0.03, 'NOX'), (0.02, 'PTRATIO'),
    #  (0.01, 'TAX'), (0.01, 'INDUS'), (0.01, 'B'), (0.01, 'AGE'), (0.0, 'ZN'), (0.0, 'RAD'), (0.0, 'CHAS')]

    f, ax = plt.subplots(figsize=(7, 5))
    ax.bar(range(0, len(rf.feature_importances_)), rf.feature_importances_)
    ax.set_title("feature importance")

Note: `load_boston` was deprecated in scikit-learn 1.0 and removed in 1.2. This code, and the XGBoost example below that uses the same data, therefore needs an older scikit-learn or a different regression dataset such as `fetch_california_housing`.

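Evaluating models for continuous data (regression)

The usual MSE evaluation:

    from sklearn.metrics import mean_squared_error, mean_absolute_error, r2_score

    mean_squared_error(y, rf.predict(X))  # mean of the squared errors
    # 1.6167176877470346

MAE evaluation:

    mean_absolute_error(y, rf.predict(X))  # mean of the absolute errors

R² score evaluation:

    r2_score(y, rf.predict(X))  # 1.0 is a perfect fit

All three scores here are computed on the training data, so they look better than the model would do on new data.

A random forest classifier on the breast cancer data, with a train/test split:

    from sklearn.ensemble import RandomForestClassifier
    from sklearn.datasets import load_breast_cancer
    from sklearn.model_selection import train_test_split  # default split: 75% train, 25% test

    cancer = load_breast_cancer()
    X_train, X_test, y_train, y_test = train_test_split(cancer.data, cancer.target, random_state=42)
    forest = RandomForestClassifier(n_estimators=100, random_state=42)
    forest.fit(X_train, y_train)
    # RandomForestClassifier(random_state=42)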

Prediction and scoring

    # score() predicts and returns the accuracy; always check it on both the training set and the test set
    print('Training set accuracy {:.3f}'.format(forest.score(X_train, y_train)))
    print('Test set accuracy {:.3f}'.format(forest.score(X_test, y_test)))
    # Training set accuracy 1.000
    # Test set accuracy 0.965

    from sklearn.tree import export_graphviz

    # Export the first tree of the forest to a Graphviz .dot file (target 0 = malignant, 1 = benign)
    export_graphviz(forest.estimators_[0], out_file="tree.dot", class_names=["malignant", "benign"],
                    feature_names=cancer.feature_names, impurity=False, filled=True)

    from IPython.display import display
    import graphviz

    # Read the .dot file back in and render it
    with open("tree.dot", "rt", encoding='UTF-8') as f:
        dot_graph = f.read()
    display(graphviz.Source(dot_graph))
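If Graphviz is not installed, `sklearn.tree.export_text` (scikit-learn 0.21 or later) prints a tree's rules as plain text instead. This sketch refits the same forest so that it runs on its own. It limits the output to the top two levels with `max_depth=2`.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import export_text

cancer = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(cancer.data, cancer.target, random_state=42)
forest = RandomForestClassifier(n_estimators=100, random_state=42).fit(X_train, y_train)

# Plain-text rules for the first tree, showing only the top 2 levels
rules = export_text(forest.estimators_[0], feature_names=list(cancer.feature_names), max_depth=2)
print(rules)
```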

Prediction with XGBoost

    import pandas as pd
    from sklearn.datasets import load_boston

    boston = load_boston()  # data: independent variables, target: dependent variable
    data = pd.DataFrame(boston.data)
    data.columns = boston.feature_names  # column names
    print(data.head())
    data['PRICE'] = boston.target  # add the target as a PRICE column
    print(data.info())
    data.describe()
    # <class 'pandas.core.frame.DataFrame'>
    # RangeIndex: 506 entries, 0 to 505
    # Data columns (total 14 columns):
    #  #   Column   Non-Null Count  Dtype
    # ---  ------   --------------  -----
    #  0   CRIM     506 non-null    float64
    #  1   ZN       506 non-null    float64
    #  2   INDUS    506 non-null    float64
    #  3   CHAS     506 non-null    float64
    #  4   NOX      506 non-null    float64
    #  5   RM       506 non-null    float64
    #  6   AGE      506 non-null    float64
    #  7   DIS      506 non-null    float64
    #  8   RAD      506 non-null    float64
    #  9   TAX      506 non-null    float64
    #  10  PTRATIO  506 non-null    float64
    #  11  B        506 non-null    float64
    #  12  LSTAT    506 non-null    float64
    #  13  PRICE    506 non-null    float64
    # dtypes: float64(14)
    # memory usage: 55.5 KB
    # None

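    import numpy as np
    import xgboost as xgb                           # the model
    from sklearn.metrics import mean_squared_error  # for evaluation
    from sklearn.model_selection import train_test_split

    X, y = data.iloc[:, :-1], data.iloc[:, -1]  # all columns except PRICE / PRICE
    # DMatrix: XGBoost's own optimized data structure (data = independent variables, label = dependent variable).
    # The native API (xgb.train, xgb.cv) requires it; the scikit-learn style XGBRegressor below takes X and y directly.
    data_dmatrix = xgb.DMatrix(data=X, label=y)
    # (A DataFrame is like an ndarray plus a dict: order is preserved and duplicate values are allowed.)

    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=123)

    xg_reg = xgb.XGBRegressor(objective='reg:squarederror',  # squared-error regression ('reg:linear' is the deprecated name)
                              colsample_bytree=0.3,  # fraction of the features sampled for each tree
                              learning_rate=0.1,     # shrinks each tree's contribution, like the step size in gradient descent
                              max_depth=5,           # maximum depth of each tree; limiting it helps prevent overfitting
                              alpha=10,              # L1 regularization on the leaf weights (alias of reg_alpha)
                              n_estimators=10)       # number of trees (boosting rounds)
    xg_reg.fit(X_train, y_train)
    preds = xg_reg.predict(X_test)  # predictions (y-hat)
    rmse = np.sqrt(mean_squared_error(y_test, preds))
    print("RMSE: %f" % (rmse))

    import matplotlib.pyplot as plt

    plt.rcParams['figure.figsize'] = [500, 200]  # set the size before plotting, otherwise it does not apply to this figure
    xgb.plot_tree(xg_reg, num_trees=0)           # draw the first tree (needs graphviz)
    plt.show()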
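XGBoost is a regularized, heavily optimized form of gradient boosting. To show what `n_estimators`, `learning_rate` and `max_depth` control, the sketch below implements plain gradient boosting for squared error with scikit-learn decision trees. It is a simplified illustration of the general idea, not XGBoost's algorithm: there are no regularization terms such as `alpha` and no column subsampling. `boost` and `boost_predict` are our own hypothetical helper names.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

def boost(X, y, n_estimators=10, learning_rate=0.1, max_depth=3):
    # Start from the mean, then repeatedly fit a small tree to the current residuals
    # (for squared error these are the negative gradient) and add a shrunken copy of it
    base = y.mean()
    pred = np.full(len(y), base)
    trees = []
    for _ in range(n_estimators):
        residual = y - pred
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0).fit(X, residual)
        pred = pred + learning_rate * tree.predict(X)
        trees.append(tree)
    return base, learning_rate, trees

def boost_predict(model, X):
    base, learning_rate, trees = model
    pred = np.full(len(X), base)
    for tree in trees:
        pred = pred + learning_rate * tree.predict(X)
    return pred

X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
for n in (1, 10, 100):
    model = boost(X, y, n_estimators=n)
    mse = np.mean((y - boost_predict(model, X)) ** 2)
    print(n, round(mse, 1))  # training MSE shrinks as more trees are added
```

A smaller `learning_rate` makes each tree's step smaller, so more trees are needed to reach the same training error.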

    from numpy import loadtxt
    import matplotlib.pyplot as plt
    from xgboost import XGBClassifier, plot_importance
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import train_test_split

    dataset = loadtxt('pima.data', delimiter=",")  # Pima Indians diabetes data: 8 feature columns + a 0/1 label
    X = dataset[:, 0:8]
    Y = dataset[:, 8]
    seed = 7
    test_size = 0.33
    X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size=test_size, random_state=seed)
    model = XGBClassifier()
    model.fit(X_train, y_train)
    print(model)

    plt.rcParams['figure.figsize'] = [50, 20]  # set before plotting so it applies
    plot_importance(model)                     # bar chart of the feature importances
    plt.show()

    y_pred = model.predict(X_test)
    print(y_pred)
    # [0. 1. 1. 0. 1. 1. 0. 0. 1. 0. 1. 0. 1. 1. 0. 0. 0. 1. 0. 0. 0. 0. 1. 1.
    #  ...
    #  0. 1. 0. 0. 1. 0. 1. 0. 0. 0. 0. 1. 0. 1.]   (254 test predictions)

    accuracy = accuracy_score(y_test, y_pred)
    print("Accuracy: %.2f%%" % (accuracy * 100.0))
    # Accuracy: 74.02%

    import numpy as np
    from sklearn.feature_selection import SelectFromModel

    thresholds = np.sort(model.feature_importances_)  # ascending order
    print(thresholds)
    # [0.08799455 0.08907107 0.09801765 0.09824965 0.09959184 0.13577047
    #  0.15170811 0.23959671]

    # Feature selection: try each importance value as a threshold
    for thresh in thresholds:
        selection = SelectFromModel(model, threshold=thresh, prefit=True)
        select_X_train = selection.transform(X_train)  # keep only the features with importance >= thresh
        selection_model = XGBClassifier()
        selection_model.fit(select_X_train, y_train)
        select_X_test = selection.transform(X_test)    # apply the same selection to the test data
        y_pred = selection_model.predict(select_X_test)
        predictions = [round(value) for value in y_pred]
        accuracy = accuracy_score(y_test, predictions)
        print("Thresh=%.3f, n=%d, Accuracy: %.2f%%" % (thresh, select_X_train.shape[1], accuracy * 100.0))

    import pickle

    with open("pima.pickle.dat", "wb") as f:
        pickle.dump(model, f)           # save the model
    with open("pima.pickle.dat", "rb") as f:
        loaded_model = pickle.load(f)   # load it back
    y_pred = loaded_model.predict(X_test)
    predictions = [round(value) for value in y_pred]
    accuracy = accuracy_score(y_test, predictions)
    print("Accuracy: %.2f%%" % (accuracy * 100.0))

Summary

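- Pipeline: does the preprocessing up front and passes the result through the pipe to the later stages (the model). Split the data before preprocessing!

- Pipeline parameters are addressed as `<name>__<parameter>`, e.g. `pipe.set_params(clf__C=10)`.

- GridSearchCV: saves you the manual grunt work when tuning parameter combinations; it builds every combination and tests each one.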

- Decision Tree

  - Regression

  - Classifier

- 3-1. Random Forest

- 3-2. XGBoost

  - DMatrix: XGBoost's dedicated matrix type

  - Visualization

  - Variable importance

- Preprocessing: z-score (subtract the mean, then divide by the standard deviation), min-max (rescale to [0, 1]), robust (subtract the median, then divide by the IQR)

Evaluation

- Classification: `confusion_matrix` (for visualization) / `classification_report` (a report of the classification metrics)

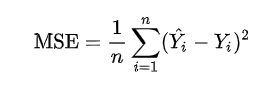

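- Regression (prediction): MSE (the mean of the squared errors) and RMSE (the square root of the MSE, in the same units as the target); for both, a smaller value means a better model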
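Here is a short sketch of these metrics on made-up labels and values. It computes MSE, RMSE and MAE from their formulas and checks them, along with R², against `sklearn.metrics`.

```python
import numpy as np
from sklearn.metrics import (classification_report, confusion_matrix,
                             mean_absolute_error, mean_squared_error, r2_score)

# Classification: made-up true and predicted labels
y_true = [0, 0, 1, 1, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0]
cm = confusion_matrix(y_true, y_pred)  # rows = true class, columns = predicted class
print(cm)                              # [[2 1]
                                       #  [1 2]]
print(classification_report(y_true, y_pred))  # precision, recall, f1-score per class

# Regression: made-up targets and predictions
t = np.array([3.0, 5.0, 2.0, 7.0])
p = np.array([2.5, 5.0, 3.0, 8.0])
mse = np.mean((t - p) ** 2)   # mean of the squared errors -> 0.5625
rmse = np.sqrt(mse)           # square root of the MSE, in the target's units -> 0.75
mae = np.mean(np.abs(t - p))  # mean of the absolute errors -> 0.625
r2 = 1 - np.sum((t - p) ** 2) / np.sum((t - t.mean()) ** 2)

print(np.isclose(mse, mean_squared_error(t, p)),
      np.isclose(mae, mean_absolute_error(t, p)),
      np.isclose(r2, r2_score(t, p)))  # True True True
```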
